The SensorFusion API from PhysioSense is designed to make physiological signal interpretation as fast, accurate, and accessible as possible. Whether you’re working with previously captured sensor data or processing live streams from wearable devices, SensorFusion offers a simple yet powerful REST-based interface to convert raw signals into meaningful values. Supporting common modalities such as PPG, ECG, and other biosignals, this API streamlines the transformation of noisy data into clinically or commercially actionable features using cutting-edge artificial intelligence and machine learning.

Using the SensorFusion API is straightforward: you send one or more signal segments—along with a few specifications—via a secure HTTP request. In response, you receive cleaned, filtered data and derived values such as waveform features or high-level physiological interpretations, depending on your selected endpoint. This lets you bypass the time-consuming steps of pre-processing and focus directly on model development, insight generation, or application deployment.
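As a rough sketch of what such a request body might look like, the helper below assembles a payload using the field names from the Python example later in this article (`raw_signal`, `frequency`, `model`). The function name `build_sensorfusion_payload` and its validation rules are illustrative assumptions, not part of the PhysioSense API, and `frequency` is presumed to be the sampling rate of the signal.

```python
import json


def build_sensorfusion_payload(raw_signal, frequency,
                               model_name="fusion-ml", model_version="3.4.2"):
    """Assemble a request body shaped like the one in this article's example.

    Hypothetical helper: the checks below are assumptions, not documented
    PhysioSense requirements.
    """
    if not raw_signal:
        raise ValueError("raw_signal must contain at least one sample")
    if frequency <= 0:
        raise ValueError("frequency must be positive")
    return {
        "data": {
            "raw_signal": list(raw_signal),
            "frequency": frequency,
            "model": {"name": model_name, "version": model_version},
        }
    }


# Build a payload for a tiny made-up segment and serialize it to JSON
payload = build_sensorfusion_payload([0.1, 0.4, 0.9, 0.5], frequency=50)
body = json.dumps(payload)
```

The serialized `body` is what would travel in the HTTP request; keeping payload construction in one function makes it easy to validate inputs before any network call is made.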

At the heart of SensorFusion is a robust signal processing engine that performs real-time filtering, artifact rejection, and segmentation of individual waveforms. These waveforms are then validated using trained models to ensure signal integrity before extracting meaningful features. These features can be directly used in your own machine learning pipelines. Alternatively, the API can return outputs from PhysioSense’s proprietary Foundational AI models—perfect for when you want to utilize the interpretative power of AI without the high data requirements of neural networks.
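To make the stages above concrete, here is a deliberately simplified toy pipeline. It is not PhysioSense's algorithm: a moving average stands in for filtering, splitting at local minima stands in for waveform segmentation, and a plain amplitude threshold stands in for the trained validation models. All function names and thresholds are assumptions chosen for illustration.

```python
from statistics import mean


def moving_average(signal, window=3):
    """Smooth a signal with a centered moving average (shorter window at the edges)."""
    half = window // 2
    return [mean(signal[max(0, i - half): i + half + 1]) for i in range(len(signal))]


def split_at_troughs(signal):
    """Split a signal into waveforms that run from one local minimum to the next."""
    troughs = [i for i in range(1, len(signal) - 1)
               if signal[i] < signal[i - 1] and signal[i] <= signal[i + 1]]
    return [signal[a:b + 1] for a, b in zip(troughs, troughs[1:])]


def reject_artifacts(waveforms, max_amplitude):
    """Keep only waveforms whose peak-to-trough amplitude is within the limit."""
    return [w for w in waveforms if max(w) - min(w) <= max_amplitude]


def toy_pipeline(raw, window, max_amplitude):
    """Chain the three toy stages: filter, segment, then validate each waveform."""
    smoothed = moving_average(raw, window)
    return reject_artifacts(split_at_troughs(smoothed), max_amplitude)


# Made-up signal: two regular pulses followed by one spike (a motion-like artifact).
# window=1 disables smoothing so the toy numbers stay easy to follow.
raw = [1, 0, 2, 4, 2, 0, 2, 4, 2, 0, 2, 40, 2, 0, 1]
clean_waveforms = toy_pipeline(raw, window=1, max_amplitude=10)
```

Here the two regular pulses survive and the spike is discarded, which mirrors the idea of validating each waveform before extracting features from it.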

Here’s a quick example of how easily the SensorFusion REST API can be called in Python to generate ML training data from raw signal data.

    import requests

    # ppg_data is assumed to map each subject ID to a dict with 'ppg' and 'age' entries
    xtrain, ytrain = [], []

    # Get SensorFusion values (AI Foundational parameters) for each segment
    for subject_id, subject_data in ppg_data.items():
        # Call the SensorFusion REST API to translate PPG data into interpretable values
        response = requests.post(
            "http://physiosense_api_url/sensorfusion",
            json={"data": {"raw_signal": subject_data['ppg'], "frequency": 50,
                           "model": {"name": "fusion-ml", "version": "3.4.2"}}},
        ).json()

        # Add SensorFusion values to the training data: one row and one label per waveform
        for segment in response['fusionCoreData']:
            for waveform_core_values in segment:
                xtrain.append(waveform_core_values)
                ytrain.append(subject_data['age'])

In this example, the source dataset contains multiple raw PPG signal segments per subject, along with each subject’s age. The xtrain values are the interpretable values returned by the PhysioSense SensorFusion API, and the ytrain values are the corresponding subject’s age. This training data can then be used to build various AI or ML models. It’s that easy!
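The nested loops in the example above imply that `fusionCoreData` is a list of segments, each holding one list of values per waveform. The sketch below shows that flattening step in isolation against a mocked response, so it can be tried without network access. The helper name `training_rows`, the mock values, and the assumption that each waveform entry is a list of numbers are all hypothetical.

```python
def training_rows(response, label):
    """Flatten a SensorFusion-style response into feature rows and matching labels.

    Assumes response['fusionCoreData'] is a list of segments, each a list of
    per-waveform value lists, as the loops in this article's example suggest.
    """
    features, labels = [], []
    for segment in response.get("fusionCoreData", []):
        for waveform_core_values in segment:
            features.append(waveform_core_values)
            labels.append(label)  # one label per waveform keeps both lists aligned
    return features, labels


# Mocked response: two segments containing two waveforms and one waveform
mock_response = {"fusionCoreData": [[[0.1, 0.2], [0.3, 0.4]], [[0.5, 0.6]]]}
xtrain, ytrain = training_rows(mock_response, label=42)
```

Because every waveform row gets its own copy of the subject's label, `xtrain` and `ytrain` always have the same length, which most training routines require.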

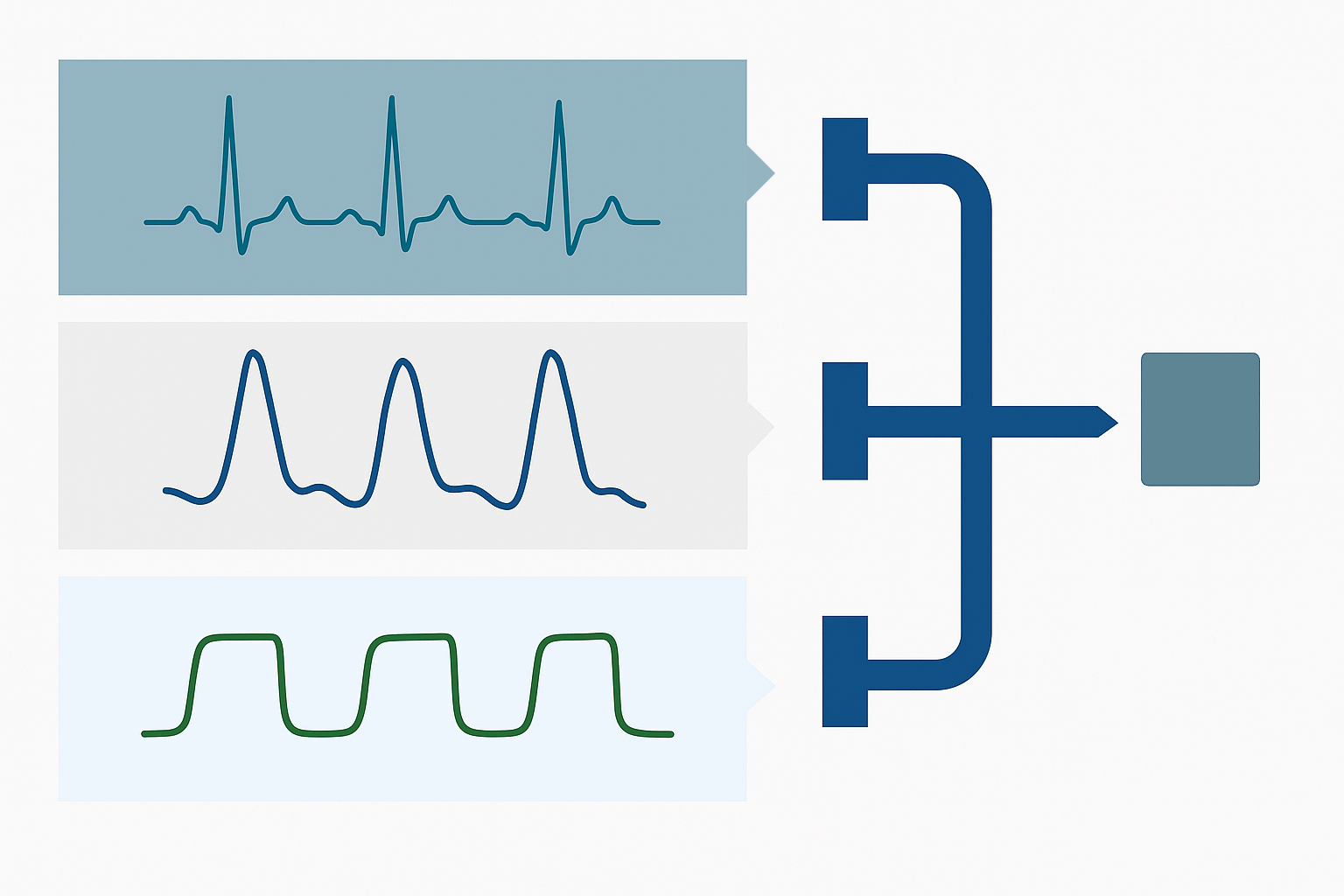

The SensorFusion API also provides the ability to process and fuse data collected from multiple sensors. For example, if you have a wearable or patient monitor with PPG and ECG signals, segments from the two sensors can be passed into the API at the same time and the interpretable values returned would reflect the physiological state of the subject through the powerful combination of those two sensors. For patient monitors, we support PPG, ECG, ABP, and CAP signals, and the API supports submitting any combination of one or more of these signals.
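A multi-sensor request might be assembled along the following lines. This is only a sketch: the article does not show the multi-sensor request schema, so the `signals` field, the helper name `build_multi_sensor_payload`, and the validation logic are assumptions. Only the four signal names and the `frequency` and `model` fields come from the text above.

```python
# Signal types the article lists as supported for patient monitors
SUPPORTED_SIGNALS = {"PPG", "ECG", "ABP", "CAP"}


def build_multi_sensor_payload(signals, frequency,
                               model_name="fusion-ml", model_version="3.4.2"):
    """Build a hypothetical request body carrying one or more signal types.

    `signals` maps a signal name (e.g. "PPG") to its list of samples.
    The "signals" key is an assumed field name, not a documented one.
    """
    if not signals:
        raise ValueError("at least one signal is required")
    unknown = set(signals) - SUPPORTED_SIGNALS
    if unknown:
        raise ValueError(f"unsupported signal types: {sorted(unknown)}")
    return {
        "data": {
            "signals": {name: list(samples) for name, samples in signals.items()},
            "frequency": frequency,
            "model": {"name": model_name, "version": model_version},
        }
    }


# Combine made-up PPG and ECG segments captured over the same time window
multi_payload = build_multi_sensor_payload(
    {"PPG": [0.1, 0.4, 0.9], "ECG": [0.0, 1.2, -0.3]}, frequency=50
)
```

Rejecting unknown signal names on the client side gives a clearer error than a failed request would, though the real API may define its own names and error responses.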